Unlocking Remote IoT Batch Jobs On AWS: A Comprehensive Guide

Are you ready to rethink how you manage and process your Internet of Things (IoT) data? Remote IoT batch jobs, a powerful and often overlooked technique, give organizations tighter control over the large volumes of data their connected devices produce and let them process that data far more efficiently.

The modern landscape of data management is evolving rapidly, driven by the proliferation of IoT devices. Organizations are generating massive volumes of data from sensors, wearables, and other connected devices. This data, however, is often siloed and difficult to process efficiently. Traditional methods of data handling, such as manual processing, are time-consuming, prone to errors, and cannot scale to meet the demands of today's data-driven world. Remote IoT batch jobs offer a solution by enabling the execution of a series of tasks or operations on IoT devices or data remotely, streamlining data processing, and unlocking valuable insights.

In this article, we will delve into remote IoT batch jobs: the core concepts, tools, and strategies for setting up and managing them effectively. We will also examine how to implement them with cloud services such as AWS, walk through example projects, and offer practical, actionable advice. So, buckle up and get ready to harness the power of remote IoT batch jobs to streamline operations, reduce costs, and enhance productivity.

Understanding Remote IoT Batch Jobs

So, what exactly is a remote IoT batch job? Essentially, it is a process that collects, organizes, and analyzes data in bulk by executing a series of tasks or operations on IoT devices or their data from a distance. Instead of handling each device or dataset manually, remote IoT batch jobs process large amounts of data automatically, saving time and resources and reducing the potential for human error.

Remote IoT batch jobs are crucial for several reasons. First and foremost, they offer scalability. As the number of IoT devices continues to grow rapidly, so does the amount of data they generate. Manual processing is simply not feasible at this scale. Remote batch jobs provide the infrastructure to handle large datasets efficiently. Second, they offer automation. Automating the processing of data from IoT devices reduces the need for manual intervention, freeing up valuable human resources and minimizing the potential for errors. Finally, they provide centralized control. By managing batch jobs remotely, organizations can monitor and control data processing from a single point, which helps ensure consistency and compliance.

Imagine a scenario in a smart city where thousands of sensors collect data on traffic, air quality, and energy consumption. Without a system to process this data in bulk, the raw information would be overwhelming and useless. Remote IoT batch jobs can aggregate this data, identify patterns, and generate actionable insights, informing decisions about traffic flow, pollution control, and energy management. This is just one example of the countless applications of remote IoT batch jobs.
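The aggregation step in this scenario can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the record layout (`sensor_type`, `timestamp` in Unix seconds, `value`) and the function name `aggregate_hourly` are assumptions chosen for the example.

```python
from collections import defaultdict
from datetime import datetime, timezone
from statistics import mean


def aggregate_hourly(readings):
    """Summarize readings per (sensor_type, UTC hour).

    Each reading is assumed to be a dict with 'sensor_type',
    'timestamp' (Unix seconds) and 'value' keys.
    """
    groups = defaultdict(list)
    for r in readings:
        # Truncate the timestamp to the start of its hour in UTC.
        hour = datetime.fromtimestamp(r["timestamp"], tz=timezone.utc).replace(
            minute=0, second=0, microsecond=0
        )
        groups[(r["sensor_type"], hour)].append(r["value"])
    # One summary row per group instead of every raw reading.
    return {
        key: {"count": len(vals), "mean": mean(vals), "max": max(vals)}
        for key, vals in groups.items()
    }


if __name__ == "__main__":
    sample = [
        {"sensor_type": "air_quality", "timestamp": 0, "value": 10},
        {"sensor_type": "air_quality", "timestamp": 60, "value": 20},
        {"sensor_type": "traffic", "timestamp": 3600, "value": 42},
    ]
    for (sensor, hour), stats in sorted(aggregate_hourly(sample).items()):
        print(sensor, hour.isoformat(), stats)
```

Collapsing raw readings into one summary per sensor type and hour is what makes the data small enough for planners to act on.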

Essential Components of Remote IoT Batch Jobs

To implement a remote IoT batch job successfully, you need several key components that work together to process and manage data efficiently. Understanding each of them is the first step in designing a reliable batch job.

1. Data Acquisition: The first step involves collecting data from the IoT devices. This data can be structured or unstructured, depending on the device and its function. The method of data acquisition may vary depending on the specific devices and their communication protocols, but generally involves the use of APIs, protocols like MQTT or HTTP, or custom data ingestion pipelines. The reliability of this process is critical, as any data loss or corruption at this stage can negatively affect the final results.

2. Data Storage: Once acquired, the data needs to be stored securely and efficiently. Cloud storage solutions like Amazon S3 or Azure Blob Storage are commonly used for their scalability, reliability, and cost-effectiveness. The storage system should be capable of handling large volumes of data and providing quick access for processing. Data should also be organized in a way that facilitates easy retrieval for batch processing.

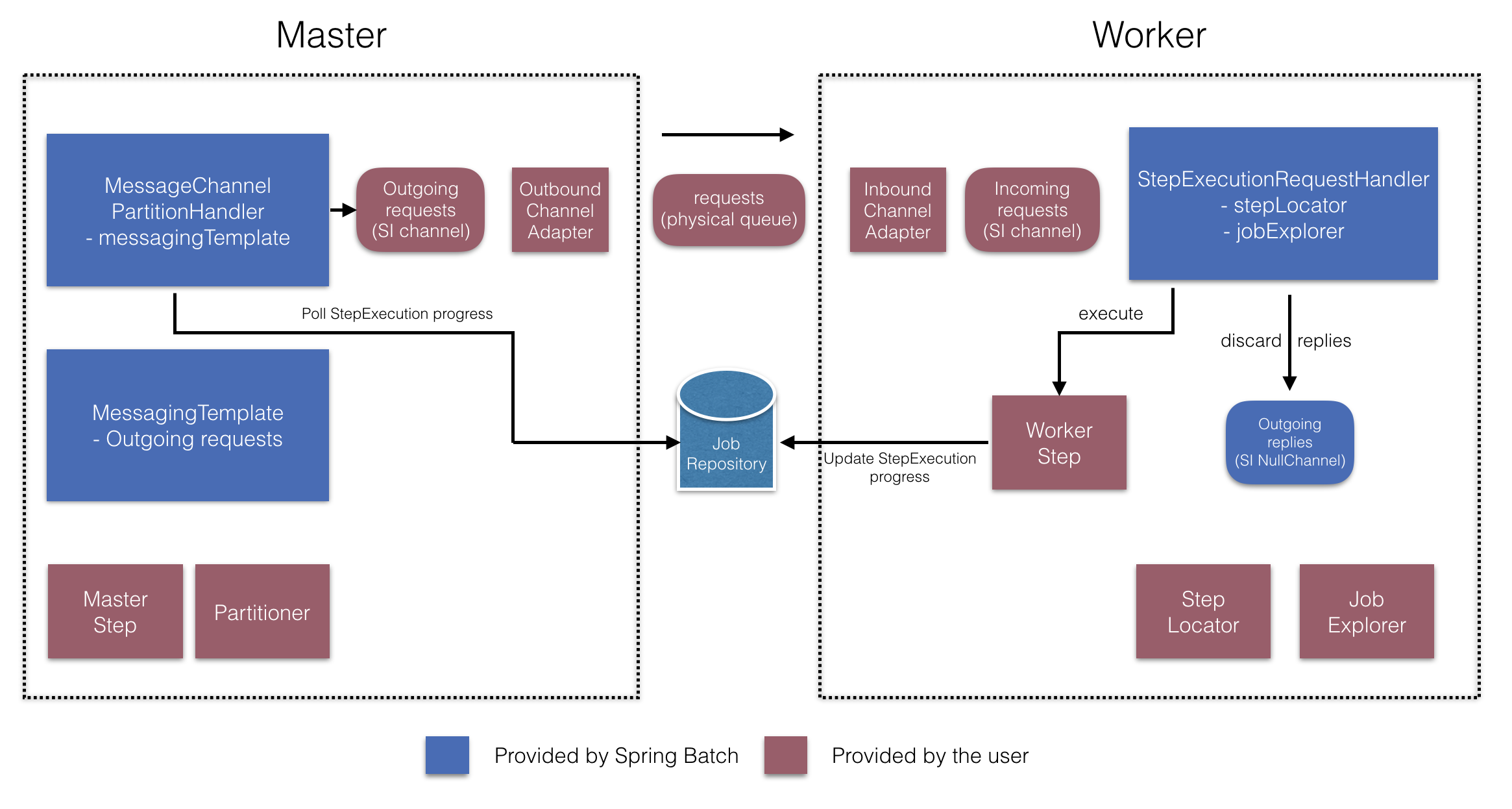

3. Processing Engine: This is the heart of the remote IoT batch job. It executes the tasks or operations defined for the data, such as cleaning, transformation, aggregation, and analysis. Common choices include Apache Spark for distributed data processing, AWS Batch for running containerized jobs on managed compute resources, and custom-built solutions. Whichever you choose must be able to keep up with the volume and velocity of data generated by the IoT devices.

4. Scheduling and Orchestration: The scheduling and orchestration component manages when and how the batch jobs are executed. Tools like Apache Airflow or AWS Step Functions are used to schedule and monitor jobs, as well as manage dependencies between tasks. This component ensures that jobs run at the required frequency and that any errors are handled appropriately.

5. Security: Security is paramount in any remote IoT batch job setup. Data must be protected both in transit and at rest. Encryption, access control, and regular security audits are critical to ensure data integrity and prevent unauthorized access. Implementing security best practices helps protect the entire IoT ecosystem.

6. Monitoring and Alerting: To keep remote IoT batch jobs running smoothly, you need comprehensive monitoring and alerting. This includes tracking job execution, resource utilization, and any errors or failures. Tools like Amazon CloudWatch or Prometheus can collect these metrics and trigger alerts when anomalies are detected. Effective monitoring shows how jobs are performing and surfaces problems before they cause outages.
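As an illustration of the data acquisition component, the following Python sketch validates messages as they arrive, so corrupt payloads are rejected at the start of the pipeline instead of silently skewing results. The topic layout `sensors/<device_id>/telemetry`, the required fields, and the `InvalidMessage` exception are hypothetical; in a real deployment an MQTT client library would supply the topic string and payload bytes.

```python
import json

REQUIRED_FIELDS = {"timestamp", "value"}


class InvalidMessage(ValueError):
    """Raised when an incoming message cannot become a usable record."""


def parse_message(topic: str, payload: bytes) -> dict:
    """Turn one MQTT-style message into a record, rejecting corrupt input.

    Assumes a hypothetical topic layout of sensors/<device_id>/telemetry
    and a JSON object payload containing at least REQUIRED_FIELDS.
    """
    parts = topic.split("/")
    if len(parts) != 3 or parts[0] != "sensors" or parts[2] != "telemetry" or not parts[1]:
        raise InvalidMessage(f"unexpected topic: {topic!r}")
    try:
        body = json.loads(payload.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError) as exc:
        raise InvalidMessage(f"corrupt payload on {topic!r}") from exc
    if not isinstance(body, dict):
        raise InvalidMessage("payload must be a JSON object")
    missing = REQUIRED_FIELDS.difference(body)
    if missing:
        raise InvalidMessage(f"missing fields: {sorted(missing)}")
    # The device ID comes from the topic, overriding any value in the body.
    return {**body, "device_id": parts[1]}
```

Rejected messages can be counted or logged so that a spike in corrupt data shows up in monitoring rather than in the final results.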
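For the data storage component, one common way to organize objects for batch retrieval is a Hive-style `key=value` prefix layout, which query engines such as Amazon Athena and Apache Spark can use to skip irrelevant partitions. The Python sketch below only builds key strings; the prefix, device ID, and batch ID naming, and the order of the partitions, are assumptions rather than a required scheme.

```python
from datetime import datetime, timezone


def object_key(prefix: str, device_id: str, ts: float, batch_id: str) -> str:
    """Build a Hive-style partitioned object key for one batch of readings.

    prefix, device_id and batch_id are caller-supplied; the layout itself
    (date partitions, then device) is one reasonable convention, not a rule.
    """
    dt = datetime.fromtimestamp(ts, tz=timezone.utc)
    return (
        f"{prefix}/year={dt:%Y}/month={dt:%m}/day={dt:%d}/hour={dt:%H}/"
        f"device={device_id}/{batch_id}.json"
    )


if __name__ == "__main__":
    # A job that only needs 2024-03-05 can list keys under a single day prefix.
    print(object_key("raw/telemetry", "dev-1", 1709640000, "batch-0001"))
```

Putting the date partitions first means a daily batch job reads one prefix instead of scanning the whole bucket.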
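The cleaning and transformation work that a processing engine performs can be illustrated at small scale in plain Python. This sketch assumes temperature readings with hypothetical `device_id`, `timestamp`, and `temp_f` fields and a placeholder plausible range; in practice the same logic would run inside Spark, an AWS Batch container, or a custom service.

```python
def clean_and_transform(readings, low_c=-40.0, high_c=60.0):
    """Deduplicate readings, convert Fahrenheit to Celsius, drop outliers.

    Assumes each reading is a dict with 'device_id', 'timestamp' and
    'temp_f'; the plausible range [low_c, high_c] is a placeholder.
    """
    seen = set()
    cleaned = []
    for r in readings:
        key = (r["device_id"], r["timestamp"])
        if key in seen:  # cleaning: the same reading delivered twice
            continue
        seen.add(key)
        temp_c = (r["temp_f"] - 32) * 5 / 9  # transformation: unit conversion
        if not low_c <= temp_c <= high_c:  # cleaning: implausible sensor value
            continue
        cleaned.append(
            {"device_id": r["device_id"], "timestamp": r["timestamp"],
             "temp_c": round(temp_c, 2)}
        )
    return cleaned
```

Deduplicating on `(device_id, timestamp)` matters because many IoT delivery paths can resend a message after a timeout.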
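At its core, the dependency management that orchestration tools such as Apache Airflow and AWS Step Functions provide comes down to running tasks in a topological order. The sketch below uses Python's standard-library `graphlib` module (Python 3.9 or later) to show that idea; it is not the API of either tool, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter


def run_in_dependency_order(dependencies, tasks):
    """Run each task only after the tasks it depends on have finished.

    dependencies maps a task name to the set of task names it depends on;
    tasks maps every task name to a zero-argument callable. Raises
    graphlib.CycleError if the dependencies contain a cycle.
    """
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name]()  # a real orchestrator would also retry and log here
    return order


if __name__ == "__main__":
    deps = {
        "clean": {"ingest"},
        "aggregate": {"clean"},
        "archive_raw": {"clean"},
        "report": {"aggregate"},
    }
    jobs = {name: (lambda n=name: print("running", n))
            for name in ["ingest", "clean", "aggregate", "archive_raw", "report"]}
    run_in_dependency_order(deps, jobs)
```

Orchestrators add scheduling, retries, and parallel execution of independent tasks (here `archive_raw` and `aggregate`) on top of this ordering.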
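Monitoring tools typically raise an alert when a metric crosses a threshold. As a simplified stand-in for a CloudWatch alarm or a Prometheus alerting rule, this Python sketch flags job runs whose duration is far above the historical norm. The three-standard-deviation threshold and the function name are assumptions to tune for your workload.

```python
from statistics import mean, stdev


def duration_alerts(history, recent, threshold=3.0):
    """Return IDs of recent job runs that took unusually long.

    history: past run durations in seconds (at least two values).
    recent: mapping of job ID -> duration in seconds.
    A run is flagged when it exceeds mean + threshold * sample stdev.
    """
    if len(history) < 2:
        raise ValueError("need at least two historical durations")
    limit = mean(history) + threshold * stdev(history)
    return sorted(job for job, secs in recent.items() if secs > limit)


if __name__ == "__main__":
    past = [100, 110, 90, 100, 100]
    print(duration_alerts(past, {"job-a": 105, "job-b": 400}))
```

In production you would usually publish durations as metrics and let the monitoring system evaluate the rule, rather than computing it inside the job.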

Tools and Strategies for Effective Implementation

Implementing remote IoT batch jobs effectively requires careful planning and the use of appropriate tools and strategies. There are several best practices to consider.

Cloud-Based Services: Leveraging cloud-based services such as AWS, Azure, or Google Cloud Platform provides significant advantages in terms of scalability, cost-effectiveness, and ease of management. These platforms offer a range of services specifically designed for handling IoT data, including data storage, processing, and analysis.

AWS Batch: AWS Batch is a fully managed batch computing service that allows you to run batch jobs on AWS. It automatically provisions the necessary compute resources, manages job scheduling, and monitors job execution. AWS Batch is a great choice for running large-scale, compute-intensive batch jobs.
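To show what submitting work to AWS Batch involves, the sketch below assembles a `SubmitJob` request as a Python dictionary that could be passed to boto3's `submit_job`. The field names (`jobName`, `jobQueue`, `jobDefinition`, `containerOverrides`, `retryStrategy`, `timeout`, `arrayProperties`) belong to that API; the queue name, job definition, one-hour timeout, and `PROCESS_DATE` variable are hypothetical and must match resources and conventions you define yourself.

```python
def build_submit_job_request(job_name, job_queue, job_definition,
                             process_date, shards=1, attempts=3):
    """Build keyword arguments for AWS Batch SubmitJob (boto3 submit_job).

    job_queue and job_definition must already exist in your account;
    PROCESS_DATE is a hypothetical variable the container would read.
    """
    if not 1 <= attempts <= 10:
        raise ValueError("AWS Batch accepts 1 to 10 attempts")
    request = {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
        "containerOverrides": {
            "environment": [{"name": "PROCESS_DATE", "value": process_date}],
        },
        # Allow up to `attempts` total attempts if the job fails.
        "retryStrategy": {"attempts": attempts},
        # Stop attempts that hang instead of paying for idle compute.
        "timeout": {"attemptDurationSeconds": 3600},
    }
    if shards > 1:
        # Array job: AWS Batch launches `shards` child jobs (2 to 10,000),
        # each with its own AWS_BATCH_JOB_ARRAY_INDEX.
        request["arrayProperties"] = {"size": shards}
    return request


if __name__ == "__main__":
    req = build_submit_job_request(
        "nightly-telemetry", "iot-batch-queue", "iot-aggregate:1",
        "2024-03-05", shards=8,
    )
    print(req)
    # With credentials configured:
    # import boto3
    # boto3.client("batch").submit_job(**req)
```

Building the request separately from the API call keeps the logic testable without AWS credentials.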

Containerization (Docker, Kubernetes): Containerization is a critical strategy for ensuring portability and consistency across different environments. Docker allows you to package your code and its dependencies into a container, ensuring that the application runs the same way regardless of the underlying infrastructure. Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications.
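Containers stay portable when they read their configuration from environment variables rather than hard-coded values. This Python sketch shows an entrypoint helper that picks its share of the work from `AWS_BATCH_JOB_ARRAY_INDEX`, which AWS Batch sets in each child of an array job, and from `SHARD_COUNT`, a hypothetical variable you would pass yourself. With neither set, it processes everything, so the same image runs unchanged on a developer laptop.

```python
import os


def select_shard(items, env=None):
    """Return the items this container instance is responsible for.

    AWS Batch sets AWS_BATCH_JOB_ARRAY_INDEX in each child of an array job;
    SHARD_COUNT is a hypothetical variable you would set to the array size.
    With neither variable present, every item is returned.
    """
    env = os.environ if env is None else env
    index = int(env.get("AWS_BATCH_JOB_ARRAY_INDEX", "0"))
    count = int(env.get("SHARD_COUNT", "1"))
    if not 0 <= index < count:
        raise ValueError(f"array index {index} outside 0..{count - 1}")
    # Round-robin assignment: item i goes to shard i % count.
    return [item for i, item in enumerate(items) if i % count == index]


if __name__ == "__main__":
    devices = [f"dev-{n}" for n in range(10)]
    print(select_shard(devices))
```

Round-robin assignment keeps shards roughly equal in size without any coordination between containers.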

Data Pipelines: A well-designed data pipeline is essential for moving data from IoT devices to processing and analysis. The pipeline should handle extract, transform, and load (ETL) processes, and it should be fault-tolerant and scalable enough to absorb growing data volumes.
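A fault-tolerant pipeline keeps going when individual records are bad and keeps memory use flat as volumes grow. The Python sketch below chains generator-based extract, transform, and load stages, routes malformed records to a dead-letter list for later inspection, and loads in fixed-size chunks. The record fields and the `sink` callable (for example, a function that writes one object per chunk to storage) are assumptions.

```python
import json
from itertools import islice


def extract(lines, dead_letter):
    """Parse one JSON record per line; set aside lines that are not valid JSON."""
    for line in lines:
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            dead_letter.append(line)


def transform(records, dead_letter):
    """Keep only the fields downstream steps need, normalizing their types."""
    for r in records:
        try:
            yield {"device_id": str(r["device_id"]), "value": float(r["value"])}
        except (KeyError, TypeError, ValueError):
            dead_letter.append(r)


def load(records, sink, chunk_size=500):
    """Hand records to `sink` in fixed-size chunks to bound memory use."""
    it = iter(records)
    while chunk := list(islice(it, chunk_size)):
        sink(chunk)


def run_etl(lines, sink, chunk_size=500):
    """Stream lines through extract -> transform -> load; return rejects."""
    dead_letter = []
    load(transform(extract(lines, dead_letter), dead_letter), sink, chunk_size)
    return dead_letter
```

Because each stage is a generator, only one chunk of records is held in memory at a time, regardless of the size of the input.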

Optimization: Optimization is crucial for ensuring that batch jobs run efficiently. This includes optimizing the processing code, choosing instance types that match the workload (for example, memory-optimized instances for large in-memory aggregations), and fine-tuning the scheduling parameters. Reviewing jobs regularly helps ensure that resources are not wasted.

Version Control: A version control system such as Git is essential for managing the code used in remote IoT batch jobs. It lets you track changes, collaborate with others, and revert to previous versions if necessary, and it underpins continuous integration and continuous deployment (CI/CD).

Remote IoT Batch Job Examples on AWS

AWS provides a comprehensive set of services for implementing remote IoT batch jobs. AWS Batch, in particular, schedules and runs large numbers of containerized jobs, including jobs that depend on one another, which makes it a natural fit for many IoT projects. The following example projects show how these services can work together.

1. Smart Agriculture: This project involves collecting data from sensors deployed in agricultural fields. These sensors measure soil moisture, temperature, and humidity. This data is then processed using AWS Batch to optimize irrigation, fertilizer application, and overall crop management. The processed data provides farmers with valuable insights and actionable recommendations for improving yield and reducing waste.

2. Predictive Maintenance: In this example, data from industrial equipment is used to predict when maintenance is required. Sensors collect data on equipment performance, such as vibration, temperature, and pressure. AWS Batch is used to analyze this data, identify patterns, and predict potential failures. This proactive approach prevents costly downtime and increases the lifespan of equipment.

3. Smart Cities: Data from various city sensors, such as traffic cameras and environmental monitoring stations, is used to analyze conditions across the city. AWS Batch processes this data on a regular schedule (for example, hourly or nightly), supporting effective management of traffic flow, air quality, and other city services. The results enable data-driven decision-making that improves the quality of life for citizens.

4. Energy Consumption Analysis: Data from smart meters is collected to analyze energy consumption patterns. AWS Batch is used to process this data and identify areas where energy savings can be achieved. These insights allow energy companies to optimize resource allocation and help consumers reduce their energy bills.

5. Retail Analytics: Retailers use data from IoT devices, such as point-of-sale systems and in-store sensors, to analyze customer behavior and optimize store layouts. AWS Batch is used to process this data and gain actionable insights into customer preferences and purchasing habits. This information can lead to improved customer experiences and increased sales.
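For the predictive maintenance project (example 2 above), a simple starting point is to alert when smoothed vibration readings drift above a limit. This Python sketch is a deliberately basic stand-in for more sophisticated failure-prediction models; the window size and limit are placeholder values, not recommended thresholds for any real machine.

```python
from collections import deque


def maintenance_alerts(vibration, window=5, limit=7.0):
    """Return indices where the rolling mean of `window` samples exceeds `limit`.

    Averaging over a window keeps a single noisy spike from raising an alert.
    The window and limit are placeholders to be tuned per machine.
    """
    recent = deque(maxlen=window)  # only the last `window` samples are kept
    alerts = []
    for i, value in enumerate(vibration):
        recent.append(value)
        if len(recent) == window and sum(recent) / window > limit:
            alerts.append(i)
    return alerts


if __name__ == "__main__":
    readings = [1, 1, 1, 1, 1, 20, 20, 20, 20, 20]
    print(maintenance_alerts(readings))
```

Run as a batch job over each machine's daily readings, this produces a short list of machines to inspect rather than a stream of raw sensor values.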

Challenges and Solutions in Remote Batch Job Execution

While remote IoT batch jobs offer numerous benefits, they also come with their own set of challenges. Understanding these challenges and having the right solutions in place is critical for ensuring the success of any project. Here are some of the most common challenges and potential solutions.

Reliable Connectivity: One of the biggest challenges in remote batch job execution is ensuring reliable connectivity. IoT devices are often deployed in remote locations with limited or unreliable network access. To address this, consider the following:

- Employing edge computing, where some processing is done on the device or at the edge of the network. This reduces the need for constant data transfer.

- Implementing data buffering and offline processing, so devices can keep collecting and processing data while disconnected and synchronize their results once the network is restored.

- Utilizing satellite communication or other alternative methods of communication where standard connectivity is not available.
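Data buffering is often implemented as a store-and-forward queue on the device or gateway. The Python sketch below holds records while the link is down and flushes them in batches once sending succeeds. The `send` callable stands in for an MQTT or HTTP client and is assumed to return `True` on success; the buffer size and the drop-oldest behavior when full are design assumptions.

```python
from collections import deque
from itertools import islice


class StoreAndForwardBuffer:
    """Buffer records while offline and forward them when a send succeeds."""

    def __init__(self, send, max_records=10_000):
        self._send = send  # callable(list) -> bool, e.g. wrapping an MQTT publish
        # A bounded deque silently drops the oldest record when full.
        self._queue = deque(maxlen=max_records)

    def add(self, record):
        self._queue.append(record)

    def flush(self, batch_size=100):
        """Send buffered records oldest-first; return how many were delivered."""
        if batch_size < 1:
            raise ValueError("batch_size must be positive")
        delivered = 0
        while self._queue:
            batch = list(islice(self._queue, batch_size))
            if not self._send(batch):
                break  # still offline: keep everything for the next attempt
            for _ in batch:
                self._queue.popleft()
            delivered += len(batch)
        return delivered

    def __len__(self):
        return len(self._queue)
```

Records are removed only after a successful send, so a failed flush never loses data; the trade-off is that a partially received batch may be resent, which downstream deduplication has to tolerate.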

Data Security and Privacy: Protecting sensitive data is paramount. IoT data often contains personal information or sensitive operational details. Here's how to improve security and privacy:

- Implement encryption for data both in transit and at rest.

- Employ access control mechanisms to limit data access to authorized users only.

- Comply with data privacy regulations, such as GDPR and CCPA.

- Regularly audit the security of your IoT ecosystem.

Data Volume and Velocity: Dealing with large volumes of data and high data velocities requires scalable infrastructure. Here's how to optimize your operations:

- Use cloud-based services like AWS, which offer scalability and on-demand resources.

- Optimize data processing pipelines to handle large datasets efficiently.

- Consider using techniques like data sampling or aggregation to reduce data volumes.
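Sampling can reduce data volume even when you do not know in advance how many readings will arrive. Reservoir sampling (Algorithm R), sketched below in Python, keeps a uniform random sample of `k` items from a stream of any length in constant memory. The choice of this particular algorithm is ours, not something the techniques above require.

```python
import random


def reservoir_sample(stream, k, rng=None):
    """Keep a uniform random sample of k items from a stream of unknown length."""
    rng = rng if rng is not None else random.Random()
    sample = []
    for i, item in enumerate(stream):
        if i < k:
            sample.append(item)  # fill the reservoir first
        else:
            # Replace an existing entry with probability k / (i + 1).
            j = rng.randint(0, i)  # inclusive on both ends
            if j < k:
                sample[j] = item
    return sample


if __name__ == "__main__":
    print(reservoir_sample(range(1_000_000), 5, random.Random(7)))
```

Passing a seeded `random.Random` makes a sampled batch reproducible, which helps when a job has to be re-run.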

Resource Management: Managing compute resources efficiently is essential for cost optimization. Here's how to optimize resource utilization:

- Use auto-scaling to automatically adjust the number of compute resources based on demand.

- Monitor resource utilization and optimize the job configurations.

- Use spot instances or reserved instances to reduce costs.

Job Failure and Recovery: Batch jobs can fail due to various reasons, such as hardware failures or software errors. Having robust error handling and recovery mechanisms is crucial.

- Implement retry mechanisms to automatically re-run failed jobs.

- Use monitoring and alerting to detect and respond to job failures.

- Design jobs that can recover from interruptions, such as by checkpointing progress.
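The retry and checkpointing techniques above can be combined in a small amount of Python. In this sketch, each item is retried with exponential backoff and random jitter, and progress is saved after every item so that a restarted job resumes where it stopped. The checkpoint file format and function names are assumptions; `os.replace` is used because renaming a file is atomic on POSIX filesystems.

```python
import functools
import json
import os
import random
import time


def with_retries(func, attempts=3, base_delay=1.0):
    """Call func(), retrying failures with exponential backoff and jitter."""
    for attempt in range(attempts):
        try:
            return func()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller (or AWS Batch) see it
            # Wait roughly base_delay, 2*base_delay, 4*base_delay, ... with jitter.
            time.sleep(base_delay * 2 ** attempt * random.uniform(0.5, 1.5))


def load_checkpoint(path):
    """Return the index of the next item to process (0 if no checkpoint yet)."""
    try:
        with open(path) as f:
            return json.load(f)["next_index"]
    except FileNotFoundError:
        return 0


def save_checkpoint(path, next_index):
    """Write the checkpoint to a temp file, then atomically swap it in."""
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump({"next_index": next_index}, f)
    os.replace(tmp, path)


def process_all(items, handle, checkpoint_path, base_delay=1.0):
    """Process items in order, resuming after the last checkpointed item."""
    start = load_checkpoint(checkpoint_path)
    for i in range(start, len(items)):
        with_retries(functools.partial(handle, items[i]), base_delay=base_delay)
        save_checkpoint(checkpoint_path, i + 1)  # item i is done
    return len(items) - start
```

In-job retries handle brief glitches such as a throttled API call, while job-level retries (for example, an AWS Batch retry strategy) cover crashed containers; the checkpoint keeps the second kind from redoing finished work.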

Security in Remote IoT Batch Jobs on AWS

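When implementing remote IoT batch jobs with AWS, security is a primary concern. AWS provides a robust set of security features and participates in many industry compliance programs. Under the AWS shared responsibility model, AWS secures the underlying cloud infrastructure, while you remain responsible for securing your own data, configurations, and access policies. Here are some key aspects of AWS security.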

Encryption: AWS offers advanced encryption capabilities to protect data both in transit and at rest. This includes:

- Encrypting data stored in Amazon S3 and other storage services.

- Using TLS/SSL to encrypt data transmitted between devices and AWS services.

- Using AWS Key Management Service (AWS KMS) to create, control, and rotate encryption keys.
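Server-side encryption can be requested per object when writing to Amazon S3. The Python sketch below builds keyword arguments for boto3's `put_object` with SSE-KMS (`ServerSideEncryption="aws:kms"` plus `SSEKMSKeyId`). S3 already encrypts new objects with SSE-S3 by default; choosing SSE-KMS lets you manage the key in AWS KMS. The bucket, object key, and KMS key ARN are placeholders.

```python
import json


def build_encrypted_put(bucket, key, records, kms_key_id):
    """Build boto3 S3 put_object keyword arguments that request SSE-KMS."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": json.dumps(records).encode("utf-8"),
        "ContentType": "application/json",
        # Ask S3 to encrypt the object at rest with the given KMS key.
        "ServerSideEncryption": "aws:kms",
        "SSEKMSKeyId": kms_key_id,
    }


if __name__ == "__main__":
    kwargs = build_encrypted_put(
        "example-iot-bucket",
        "raw/telemetry/batch-0001.json",
        [{"device_id": "dev-1", "value": 21.5}],
        "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID",
    )
    # With credentials configured (boto3 talks to S3 over HTTPS by default):
    # import boto3
    # boto3.client("s3").put_object(**kwargs)
    print({k: v for k, v in kwargs.items() if k != "Body"})
```

A bucket policy can additionally reject uploads that do not request the expected encryption, so the setting cannot be forgotten by a single job.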

Access Control: AWS provides robust access control mechanisms to ensure that only authorized users and devices can access data and resources. Key features include:

- Using AWS Identity and Access Management (IAM) to manage user permissions.

- Implementing role-based access control (RBAC) to grant users only the necessary permissions.

- Using network access control lists (ACLs) to control network traffic.

Monitoring and Auditing: AWS provides comprehensive monitoring and auditing capabilities to help you detect and respond to security threats. Key features include:

- Using Amazon CloudWatch to monitor system performance and security metrics.

- Using AWS CloudTrail to record API calls and changes to your AWS resources.

- Performing regular security audits to identify and address vulnerabilities.

Compliance: AWS participates in numerous compliance programs covering industry standards and regulations. These provide a foundation for compliant IoT deployments, although your own workload must still be configured to meet each requirement. Examples include:

- ISO 27001, ISO 27017, and ISO 27018.

- SOC 1, SOC 2, and SOC 3.

- HIPAA eligibility and PCI DSS Level 1.

Best Practices for AWS Security: Here are some best practices to enhance the security of your AWS-based remote IoT batch jobs.

- Use the principle of least privilege when granting IAM permissions.

- Regularly apply security patches to the operating systems and container images your jobs run on.

- Enable multi-factor authentication (MFA) for all IAM users.

- Use security groups to control network traffic.

- Monitor your AWS environment for security threats.
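The principle of least privilege is easiest to see in an actual policy document. This Python sketch builds an IAM policy that lets a batch job read objects under a single S3 prefix and list only that prefix. The bucket and prefix names are hypothetical; you would attach the resulting JSON to the IAM role your AWS Batch jobs assume.

```python
import json


def read_only_prefix_policy(bucket, prefix):
    """Build an IAM policy granting read access to one S3 prefix only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Read objects under the prefix, and nothing else in the bucket.
                "Sid": "ReadTelemetryObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}/*"],
            },
            {
                # Listing is a bucket-level action, narrowed by s3:prefix.
                "Sid": "ListTelemetryPrefix",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(read_only_prefix_policy("example-iot-bucket", "raw"), indent=2))
```

A job that only reads raw telemetry gets no write or delete permissions, so a bug or compromised container cannot alter the source data.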

The Future of Remote IoT Batch Jobs

The future of remote IoT batch jobs is bright, with significant advancements on the horizon. As technology continues to evolve, we can expect the following.

Artificial Intelligence and Machine Learning: Integration of AI and ML is expected to revolutionize how data is processed and insights are generated. This includes automated data analysis, predictive maintenance, and anomaly detection, leading to more efficient and intelligent IoT systems.

Edge Computing: Edge computing, where data is processed closer to the source, will become more prevalent. This will reduce latency, improve reliability, and enable real-time insights. Edge computing also enhances security by reducing the amount of data transmitted over the network.

Increased Automation: Automation will play a more significant role in all aspects of IoT data processing, from data acquisition to analysis and insights generation. This reduces the need for manual intervention and improves efficiency.

Enhanced Data Security: Advancements in security technologies will ensure the integrity and privacy of IoT data. This includes more sophisticated encryption, access control, and threat detection mechanisms.

Integration with Blockchain: Blockchain technology can add security and transparency. Integrating blockchain with IoT data processing provides a tamper-evident ledger for tracking and verifying data.

5G and Beyond: The advent of 5G and beyond will provide faster and more reliable connectivity, allowing for the development of more complex and data-intensive IoT applications. This will significantly impact the speed and efficiency of remote IoT batch jobs.

As technology continues to evolve, the power and efficiency of remote IoT batch jobs will only increase. The capacity to handle massive datasets, derive valuable insights, and automate complex workflows will continue to drive innovation in various industries. Staying up-to-date with the latest trends and best practices will be essential for success. The future of data processing is here, and remote IoT batch jobs are at the forefront of this exciting evolution.